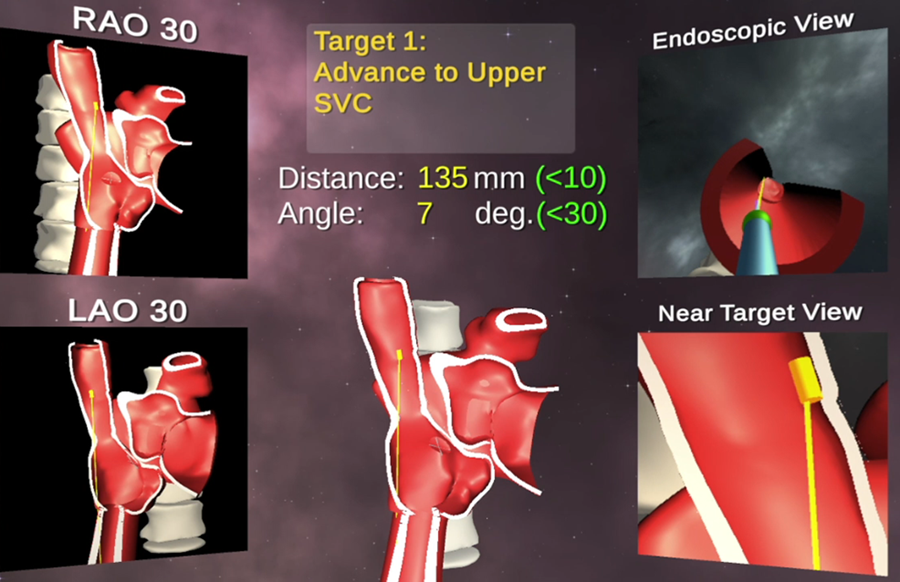

The overall goal of this project is to develop a mixed reality (MR) and deep learning (DL)-based system for intra-operative image guidance of interventional procedures conducted under fluoroscopy. This guidance system will offer a method for navigating and visualizing percutaneous interventions that is both quantitative and intuitive. It will render, via an MR headset, a true 3D representation of the position of a catheter inside a patient’s body. To do so, it will use novel DL algorithms for real-time segmentation of fluoroscopy images and co-register the results with 3D-imaging data, such as cone-beam computed tomography (CT), conventional CT, or magnetic resonance imaging (MRI).

Percutaneous and minimally invasive procedures are used for many cardiovascular ailments and are typically guided by imaging modalities such as x-ray fluoroscopy and echocardiography, which provide real-time imaging. Still, most organs are transparent to fluoroscopy, so contrast agents, which transiently opacify structures of interest, must be used to visualize surrounding tissue. Furthermore, fluoroscopy provides only a 2D projection of the catheter and device, so it gives no information on depth. Echocardiography can directly image anatomic structures and blood flow, so it is often used as a complementary imaging modality. Although echocardiography can provide useful intra-operative 2D/3D images, it requires skilled operators, and general anesthesia is a prerequisite for transesophageal echocardiographic guidance. These limitations increase the complexity of procedures, which often require an interventionalist to determine catheter/device position by analyzing multiple imaging angles and modalities.

The added coordination of different specialties also increases resource utilization and costs. Pre-operative 3D-imaging offers detailed anatomic information that is often displayed on separate screens or overlaid on real-time modalities to improve image-guided interventions. But this method of fusion imaging obstructs the real-time image view during procedures. Furthermore, the images are displayed on 2D screens, which fundamentally limit the ability to perceive depth and orientation.

The lab is focused on solving the hurdles to creating the MR guidance system, which include real-time catheter tracking, co-registration, and motion compensation. The lab has demonstrated the primary components of this system on a patient-specific 3D-printed model for training of a transseptal puncture. But clinical use of this system requires more advanced DL-based architectures to make processing faster and more accurate. The team will optimize its software and hardware system for integration into a clinical workflow and will validate the system in an institutional review board (IRB)-approved pilot study. This study will compare cardiac fellows’ performance on a mock procedure using the Mosadegh Lab guidance system versus standard imaging.